Welcome!

I am Atmadeep Banerjee, and you have made it onto my personal website. Please take a look around.

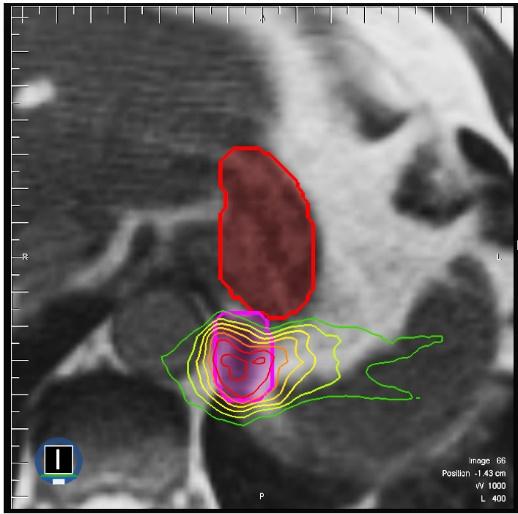

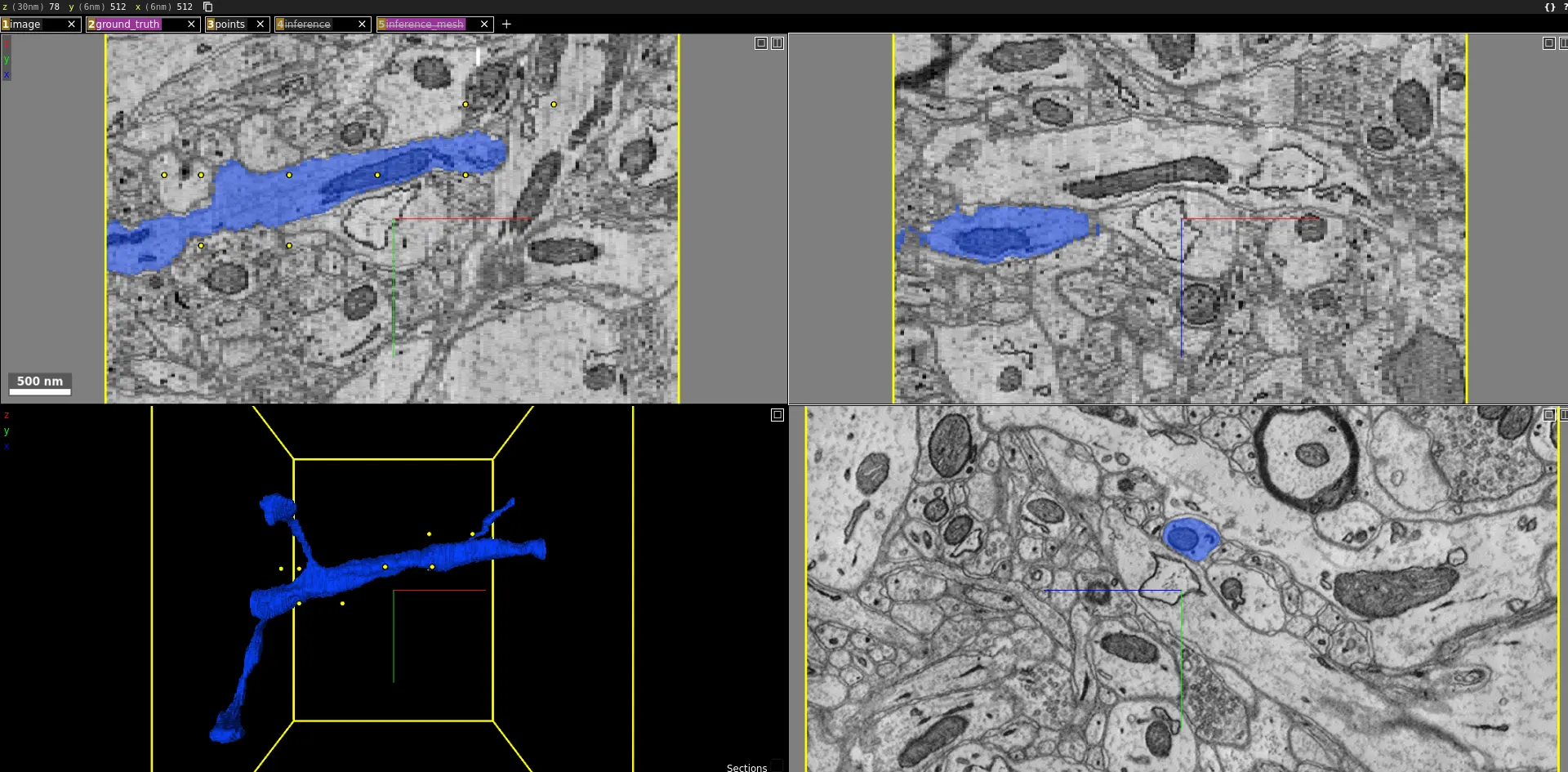

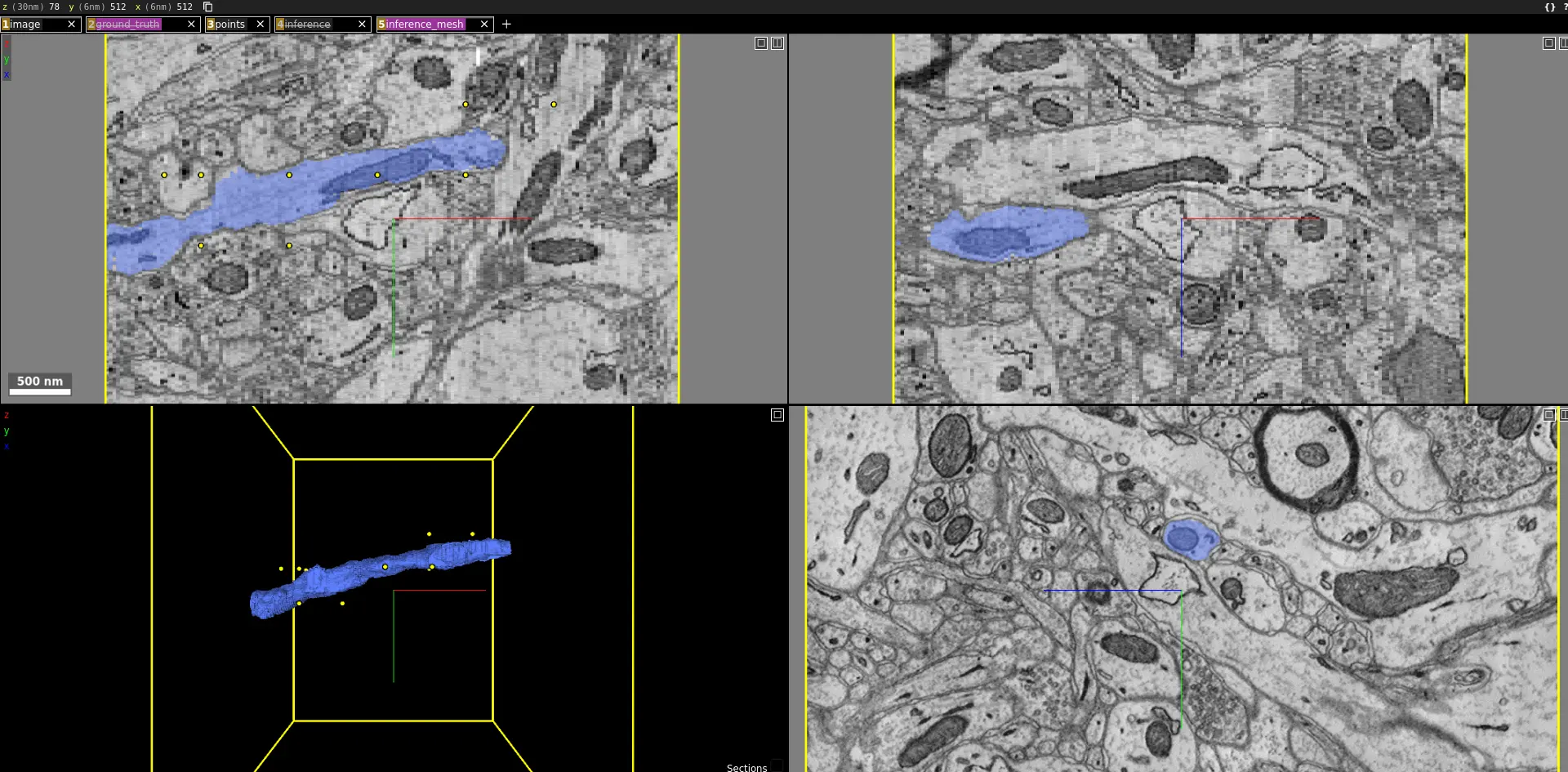

About me: I am a deep learning researcher, primarily interested in computer vision. My current research interests include generative AI and applications of deep learning in biomedicine.

Over the years, I have used AI to solve a broad set of problems spanning multiple domains, such as computer vision, natural language processing, and speech. On the engineering side, I have worked at several startups, building their AI products and data pipelines.

I occasionally participate in Kaggle competitions and am currently ranked as a Competitions Expert. I also (try to) maintain a blog where I share my insights on various AI-related topics. You can check it out here.
